Writing the Spider

Extracting data into items


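```python
import logging


def parse(self, response):  # method of the spider class
    # [1:-1] drops the first and last <tr> of the job table (header/footer rows, not postings)
    tr_list = response.xpath("//table[@class='tablelist']/tr")[1:-1]
    for tr in tr_list:
        item = {}
        item['title'] = tr.xpath("./td[1]/a/text()").extract_first()
        item['position'] = tr.xpath("./td[2]/text()").extract_first()
        item['number'] = tr.xpath("./td[3]/text()").extract_first()
        item['place'] = tr.xpath("./td[4]/text()").extract_first()
        item['publish_date'] = tr.xpath("./td[5]/text()").extract_first()
        logging.warning(item)  # write each item to the log while debugging
        yield item  # hand the item to the item pipelines
```

Constructing Request objects for pagination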


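```python
import scrapy


def parse(self, response):
    ...  # item extraction from the previous section
    # URL of the next page
    next_url = response.xpath("//a[@id='next']/@href").extract_first()
    # An href of "javascript:;" (or no link at all) means this is the last page
    if next_url and next_url != "javascript:;":
        next_url = "http://hr.tencent.com/" + next_url
        yield scrapy.Request(
            next_url,
            callback=self.parse,  # parse the next page with this same method
        )
```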
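The pagination check above comes down to one decision: take the `href` of the `a#next` link, stop if it is missing or equal to `javascript:;`, and otherwise turn it into an absolute URL. The following is a minimal sketch of that decision as a plain function, so it can be tested without starting a crawl. `build_next_url` is a hypothetical helper name, and it swaps the string concatenation for the standard library's `urllib.parse.urljoin`, which also copes with hrefs that start with `/` or are already absolute. The sample hrefs are invented.

```python
from typing import Optional
from urllib.parse import urljoin

BASE_URL = "http://hr.tencent.com/"


def build_next_url(href: Optional[str], base: str = BASE_URL) -> Optional[str]:
    """Return the absolute URL of the next page, or None on the last page.

    Hypothetical helper mirroring the check in parse(): a missing link or
    an href of "javascript:;" means there is no next page.
    """
    if not href or href == "javascript:;":
        return None
    # urljoin resolves relative, root-relative and absolute hrefs alike
    return urljoin(base, href)


print(build_next_url("position.php?&start=10#a"))  # http://hr.tencent.com/position.php?&start=10#a
print(build_next_url("javascript:;"))              # None
print(build_next_url(None))                        # None
```

Inside a spider, `response.urljoin(next_url)` performs the same join relative to the URL of the page currently being parsed.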

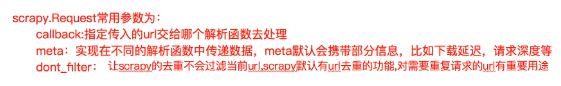

The signature of `scrapy.Request` (parameters in brackets are optional):

```
scrapy.Request(url[, callback, method='GET', headers, body, cookies, meta, dont_filter=False])
```

Constructing Request objects to crawl detail pages

```python
def parse_detail(self, response):
    # Callback for the detail-page requests
    ...
```